OLS with Heteroscedasticity

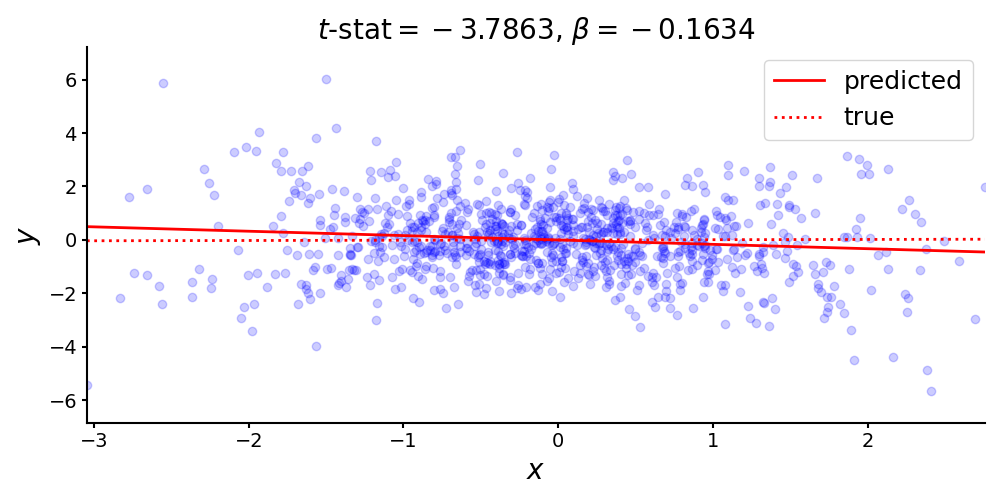

The ordinary least squares estimator is inefficient when the homoscedasticity assumption does not hold. I provide a simple example of a nonsensical $t$-statistic computed on heteroscedastic data and discuss why it arises.

Consider this linear relationship with true coefficient $\beta = 0.01$:

```python
from numpy.random import RandomState

rng = RandomState(seed=0)
x = rng.randn(1000)
beta = 0.01
noise = rng.randn(1000) + x*rng.randn(1000)
y = beta * x + noise
```

Let’s fit ordinary least squares (OLS) to these data and look at the estimated $\beta$ and its $t$-statistic:

```python
import statsmodels.api as sm

# Fit OLS without an intercept, matching the data-generating process above.
ols = sm.OLS(y, x).fit()
print(f'{ols.params[0]:.4f}')   # beta : -0.1634
print(f'{ols.tvalues[0]:.4f}')  # t-stat: -3.7863
```

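Remarkably, the sign of the coefficient is wrong, yet the $t$-statistic is large in magnitude, so the wrong estimate looks highly significant. This is because of the following line:

```python
noise = rng.randn(1000) + x*rng.randn(1000)
```

Each element in the vector `noise` depends on its corresponding element in `x`. Given $x_n$, the noise has variance $1 + x_n^2$, so its spread grows with $|x_n|$. This breaks an assumption of OLS and results in incorrectly computed standard errors and, in turn, incorrect $t$-statistics.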
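The following sketch shows the dependence concretely. It re-creates the example's noise with NumPy's newer `default_rng` generator and a larger sample, so the draws differ from the `RandomState` ones above. Because `noise` is a normal draw plus `x` times an independent normal draw, its conditional variance is $1 + x^2$. The cut-offs 0.5 and 2.0 are arbitrary illustrative choices.

```python
import numpy as np

# Re-create the example's noise: e1 + x * e2 with e1, e2 ~ N(0, 1), independent of x.
rng = np.random.default_rng(0)
n = 200_000
x = rng.standard_normal(n)
noise = rng.standard_normal(n) + x * rng.standard_normal(n)

# Conditional on x, Var(noise | x) = 1 + x**2, so the spread grows with |x|.
var_small = noise[np.abs(x) < 0.5].var()   # population value E[1 + x^2 | |x| < 0.5] is about 1.08
var_large = noise[np.abs(x) > 2.0].var()   # population value E[1 + x^2 | |x| > 2] is about 6.75
print(f"Var(noise | |x| < 0.5) ~ {var_small:.2f}")
print(f"Var(noise | |x| > 2.0) ~ {var_large:.2f}")
```

The noise for large $|x|$ is several times more variable than for small $|x|$, which is exactly the non-constant variance discussed next.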

Non-spherical errors

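Recall the OLS model,

$$
\mathbf{y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon},
$$

where $\mathbf{y}$ holds $N$ responses, $\mathbf{X}$ is an $N \times P$ matrix of predictors, and

$$
\mathbb{E}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \mathbf{0}, \qquad \mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \sigma^2 \mathbf{I}_N.
$$

In words, all noise terms $\varepsilon_n$ have the same variance $\sigma^2$ and are uncorrelated with one another. Errors with this property are called spherical errors.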

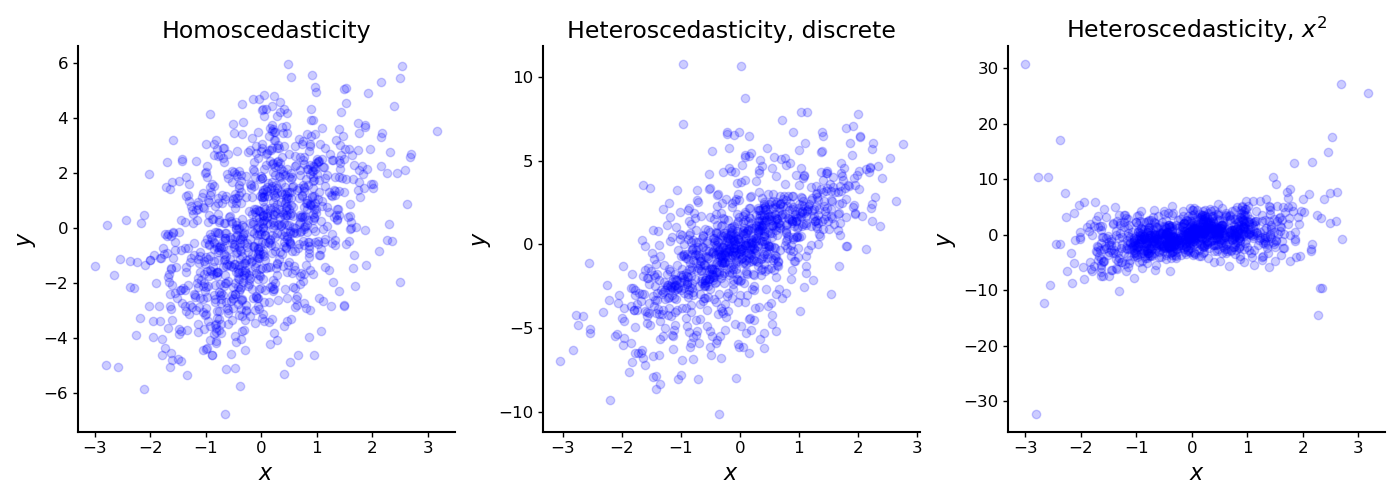

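In statistics, scedasticity refers to the distribution of the error terms. OLS assumes homoscedasticity, which means that each sample’s error term has a constant variance ($\sigma^2$ is the same for every sample). Under heteroscedasticity, each error term $\varepsilon_n$ instead has its own variance $\sigma_n^2$, and the covariance becomes

$$
\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \operatorname{diag}(\sigma_1^2, \dots, \sigma_N^2).
$$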
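Below is a minimal NumPy sketch of the two covariance structures. It builds a spherical $\sigma^2\mathbf{I}_N$ and a heteroscedastic $\operatorname{diag}(\sigma_1^2, \dots, \sigma_N^2)$, using arbitrary illustrative variances. It then draws many error vectors from the second and takes their sample covariance. This recovers the varying diagonal and near-zero off-diagonal entries.

```python
import numpy as np

n = 5

# Spherical errors: V[eps | X] = sigma^2 I_N (same variance, no correlation).
sigma2 = 2.0
omega_spherical = sigma2 * np.eye(n)

# Heteroscedastic errors: V[eps | X] = diag(sigma_1^2, ..., sigma_N^2).
# The per-sample variances are arbitrary values chosen for illustration.
sigma2_n = np.array([0.5, 1.0, 2.0, 4.0, 8.0])
omega_hetero = np.diag(sigma2_n)

# Draw many error vectors eps ~ N(0, omega_hetero) and estimate their covariance.
rng = np.random.default_rng(0)
draws = rng.multivariate_normal(np.zeros(n), omega_hetero, size=100_000)
empirical = np.cov(draws, rowvar=False)
print(np.round(empirical, 2))
```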

There are multiple forms of heteroscedasticity. Consider, for example, data which can be partitioned into distinct groups. These types of data may exhibit discrete heteroscedasticity, since each group may have its own variance (Figure

Alternatively, consider data in which each datum’s variance depends on a single predictor. These data may exhibit heteroscedasticity along that dimension (Figure
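Both forms are easy to simulate. The sketch below generates discrete heteroscedasticity across three groups and noise whose scale grows linearly with a single predictor. The group standard deviations and the linear scale are chosen purely for illustration and are not from the text. It then checks the per-group variances and the variance at low and high $x$.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 30_000

# Discrete heteroscedasticity: three groups, each with its own noise scale (illustrative values).
group = rng.integers(0, 3, size=n)
group_sd = np.array([0.5, 1.0, 3.0])
eps_discrete = group_sd[group] * rng.standard_normal(n)

# Heteroscedasticity along one predictor: the noise scale 0.2 + 0.3 * x grows with x (illustrative).
x = rng.uniform(0, 10, size=n)
eps_continuous = (0.2 + 0.3 * x) * rng.standard_normal(n)

# Per-group sample variances should be close to group_sd**2 = [0.25, 1, 9].
group_var = np.array([eps_discrete[group == g].var() for g in range(3)])
print(np.round(group_var, 2))

# Noise variance for small x vs. large x.
var_low_x = eps_continuous[x < 2].var()
var_high_x = eps_continuous[x > 8].var()
print(f"x < 2: {var_low_x:.2f}   x > 8: {var_high_x:.2f}")
```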

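A second challenge for OLS arises when the error terms are correlated, inducing autocorrelation in the observations. Then $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}]$ has nonzero off-diagonal elements, even if its diagonal is constant.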
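One common source of autocorrelation is a stationary first-order autoregressive (AR(1)) error process. It is used here as an illustrative assumption, not something the text specifies. Its covariance has entries $\sigma^2\rho^{|i-j|}$, where $\sigma^2$ is the marginal variance and $\rho$ the lag-one correlation. The diagonal is constant, so the errors are homoscedastic, but the off-diagonal elements are nonzero. The helper name `ar1_covariance` is hypothetical.

```python
import numpy as np

def ar1_covariance(n: int, sigma2: float, rho: float) -> np.ndarray:
    """Covariance of a stationary AR(1) process: Cov(eps_i, eps_j) = sigma2 * rho**|i - j|."""
    idx = np.arange(n)
    return sigma2 * rho ** np.abs(idx[:, None] - idx[None, :])

omega_ar1 = ar1_covariance(4, sigma2=1.0, rho=0.8)
print(np.round(omega_ar1, 3))

# Check by simulation: eps_t = rho * eps_{t-1} + u_t, with Var(u_t) = 1 - rho^2
# so that every eps_t has marginal variance 1.
rng = np.random.default_rng(0)
rho, T = 0.8, 100_000
u = rng.standard_normal(T) * np.sqrt(1 - rho ** 2)
eps = np.empty(T)
eps[0] = rng.standard_normal()               # start in the stationary distribution
for t in range(1, T):
    eps[t] = rho * eps[t - 1] + u[t]
lag1 = np.corrcoef(eps[:-1], eps[1:])[0, 1]
print(f"sample lag-one correlation: {lag1:.3f}")
```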

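We could, of course, have both heteroscedasticity and autocorrelation. In that case the diagonal of $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}]$ is not constant and its off-diagonal elements are nonzero. For simplicity, I will denote this generalized covariance matrix as $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \boldsymbol{\Omega}$.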
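A common way to build a covariance matrix with both features is $\boldsymbol{\Omega} = \mathbf{D}\mathbf{R}\mathbf{D}$. Here $\mathbf{D} = \operatorname{diag}(\sigma_1, \dots, \sigma_N)$ holds per-sample standard deviations and $\mathbf{R}$ is a correlation matrix. The sketch below uses arbitrary standard deviations and AR(1)-style correlations $0.6^{|i-j|}$; both are assumptions for illustration.

```python
import numpy as np

n = 4
sd = np.array([0.5, 1.0, 2.0, 4.0])                 # per-sample std devs (illustrative): heteroscedasticity
idx = np.arange(n)
corr = 0.6 ** np.abs(idx[:, None] - idx[None, :])   # AR(1)-style correlations (illustrative): autocorrelation

# Omega = D R D with D = diag(sd): non-constant diagonal and nonzero off-diagonals.
D = np.diag(sd)
omega = D @ corr @ D
print(np.round(omega, 3))

# Any valid covariance matrix must be symmetric positive definite.
eigvals = np.linalg.eigvalsh(omega)
print(f"smallest eigenvalue: {eigvals.min():.4f}")
```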

Incorrect estimation with OLS

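So what happens when we apply classic OLS to data with a general error covariance $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \boldsymbol{\Omega}$ instead of spherical errors? Here, the OLS estimator

$$
\hat{\boldsymbol{\beta}} = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\mathbf{y}
$$

is still unbiased, even with a generalized noise term:

$$
\mathbb{E}[\hat{\boldsymbol{\beta}} \mid \mathbf{X}] = \mathbb{E}\left[(\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}(\mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon}) \mid \mathbf{X}\right] = \boldsymbol{\beta} + (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\mathbb{E}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \boldsymbol{\beta}.
$$

This holds because we still assume that $\mathbb{E}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \mathbf{0}$. The argument never uses the covariance of the errors.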
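Unbiasedness can be checked by simulation. This sketch repeats the data-generating process from the opening example many times, using NumPy's `default_rng`, so individual draws differ from the `RandomState` ones. It fits OLS without an intercept to each dataset via $\hat{\beta} = \sum_n x_n y_n / \sum_n x_n^2$ and averages the estimates. The number of repetitions is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(0)
reps, n, beta = 2000, 1000, 0.01

# Each row is one simulated dataset generated like the opening example.
x = rng.standard_normal((reps, n))
noise = rng.standard_normal((reps, n)) + x * rng.standard_normal((reps, n))
y = beta * x + noise

# OLS without an intercept (as in sm.OLS(y, x)): beta_hat = (x'x)^{-1} x'y, one per row.
beta_hat = (x * y).sum(axis=1) / (x * x).sum(axis=1)

print(f"mean of beta_hat: {beta_hat.mean():.4f}")   # close to the true beta: unbiased
print(f"std  of beta_hat: {beta_hat.std():.4f}")    # spread of individual estimates
```

The average lands near the true $\beta = 0.01$. The spread of individual estimates, however, is much larger than the standard error implied by the opening example's output, about $0.1634 / 3.7863 \approx 0.043$.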

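However, despite being unbiased, the OLS estimator still has a problem. Deriving the usual OLS variance,

$$
\mathbb{V}[\hat{\boldsymbol{\beta}} \mid \mathbf{X}] = \sigma^2 (\mathbf{X}^{\top}\mathbf{X})^{-1},
$$

requires assuming homoscedasticity. In general, since $\hat{\boldsymbol{\beta}} - \boldsymbol{\beta} = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\boldsymbol{\varepsilon}$,

$$
\mathbb{V}[\hat{\boldsymbol{\beta}} \mid \mathbf{X}] = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\,\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}]\,\mathbf{X}(\mathbf{X}^{\top}\mathbf{X})^{-1},
$$

which collapses to $\sigma^2(\mathbf{X}^{\top}\mathbf{X})^{-1}$ when $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \sigma^2\mathbf{I}_N$. Without homoscedasticity, writing $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \boldsymbol{\Omega}$, the variance is

$$
\mathbb{V}[\hat{\boldsymbol{\beta}} \mid \mathbf{X}] = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\boldsymbol{\Omega}\mathbf{X}(\mathbf{X}^{\top}\mathbf{X})^{-1}.
$$

This follows immediately from plugging in $\boldsymbol{\Omega}$ instead of $\sigma^2\mathbf{I}_N$.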
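The sandwich formula $(\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\boldsymbol{\Omega}\mathbf{X}(\mathbf{X}^{\top}\mathbf{X})^{-1}$ can be checked numerically using the opening example's noise. Given $x_n$, the sum of independent normals $e_1 + x_n e_2$ is $\mathcal{N}(0, 1 + x_n^2)$. So $\boldsymbol{\Omega}$ is diagonal with entries $1 + x_n^2$, and drawing directly from that normal is equivalent. The sketch holds $\mathbf{X}$ fixed, redraws the noise many times, and compares the Monte Carlo variance of $\hat{\beta}$ with the formula.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1000
X = rng.standard_normal((n, 1))          # one predictor, no intercept, as in the example
omega_diag = 1.0 + X[:, 0] ** 2          # diagonal of Omega: Var(noise_n | x_n) = 1 + x_n^2

XtX_inv = np.linalg.inv(X.T @ X)
# Sandwich variance (X'X)^{-1} X' Omega X (X'X)^{-1}; with diagonal Omega,
# X' Omega X equals X' (omega_diag[:, None] * X), which avoids forming an N x N matrix.
sandwich = XtX_inv @ (X.T @ (omega_diag[:, None] * X)) @ XtX_inv

# Monte Carlo check holding X fixed: redraw the noise and compute beta_hat - beta,
# which for OLS equals (X'X)^{-1} X' eps (written here in row form).
reps = 4000
eps = rng.standard_normal((reps, n)) * np.sqrt(omega_diag)
beta_err = (eps @ X) @ XtX_inv           # shape (reps, 1)
mc_var = beta_err[:, 0].var()
print(f"sandwich: {sandwich[0, 0]:.5f}   Monte Carlo: {mc_var:.5f}")
```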

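The second problem concerns the usual estimator of $\sigma^2$, which is built for homoscedastic errors:

$$
s^2 = \frac{\hat{\boldsymbol{\varepsilon}}^{\top}\hat{\boldsymbol{\varepsilon}}}{N - P}, \qquad \hat{\boldsymbol{\varepsilon}} = \mathbf{y} - \mathbf{X}\hat{\boldsymbol{\beta}},
$$

Here $N$ is the number of samples and $P$ the number of predictors. Since $\hat{\boldsymbol{\varepsilon}} = \mathbf{M}\boldsymbol{\varepsilon}$ with $\mathbf{M} = \mathbf{I}_N - \mathbf{X}(\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}$, its expectation under $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \boldsymbol{\Omega}$ is

$$
\mathbb{E}[s^2 \mid \mathbf{X}] = \frac{\operatorname{tr}(\mathbf{M}\boldsymbol{\Omega})}{N - P},
$$

which reduces to $\sigma^2$ when $\boldsymbol{\Omega} = \sigma^2\mathbf{I}_N$. Consequently, plugging $s^2$ into the homoscedastic formula gives $s^2(\mathbf{X}^{\top}\mathbf{X})^{-1}$, which is in general a biased estimate of the true variance of $\hat{\boldsymbol{\beta}}$.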
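For the opening example, the bias of the usual variance estimate can be computed exactly. It follows from $\mathbb{E}[s^2 \mid \mathbf{X}] = \operatorname{tr}(\mathbf{M}\boldsymbol{\Omega})/(N - P)$, with $\mathbf{M} = \mathbf{I}_N - \mathbf{X}(\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}$. Here $\boldsymbol{\Omega}$ is diagonal with entries $1 + x_n^2$, so only the diagonal of $\mathbf{M}$ (one minus the leverages) is needed. The sketch compares $\mathbb{E}[s^2 \mid \mathbf{X}](\mathbf{X}^{\top}\mathbf{X})^{-1}$ with the true sandwich variance.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 1000, 1
X = rng.standard_normal((n, p))
omega_diag = 1.0 + X[:, 0] ** 2          # the example's Omega is diagonal with entries 1 + x_n^2

XtX_inv = np.linalg.inv(X.T @ X)
# Leverages h_n = [X (X'X)^{-1} X']_nn, so diag(M) = 1 - h.
h = np.sum((X @ XtX_inv) * X, axis=1)

# E[s^2 | X] = tr(M Omega) / (N - P); for diagonal Omega, tr(M Omega) = sum_n (1 - h_n) * Omega_nn.
expected_s2 = np.sum((1.0 - h) * omega_diag) / (n - p)

# Variance the usual formula reports (in expectation) vs. the true sandwich variance.
naive_var = expected_s2 * XtX_inv[0, 0]
true_var = (XtX_inv @ (X.T @ (omega_diag[:, None] * X)) @ XtX_inv)[0, 0]
ratio = true_var / naive_var
print(f"naive: {naive_var:.5f}   true: {true_var:.5f}   ratio: {ratio:.2f}")
```

For this noise the ratio comes out near 2. The usual formula understates the variance of $\hat{\beta}$ by about half, so the usual standard error is too small by roughly a factor of $\sqrt{2}$.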

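We can now see why we estimated an incorrect $t$-statistic in the example above. The $t$-statistic for the $p$-th coefficient is

$$
t_p = \frac{\hat{\beta}_p}{\sqrt{s^2 \left[(\mathbf{X}^{\top}\mathbf{X})^{-1}\right]_{pp}}},
$$

where $s^2$ is the usual residual-based estimate of $\sigma^2$. The denominator is the standard error implied by the homoscedastic formula $\sigma^2(\mathbf{X}^{\top}\mathbf{X})^{-1}$. With heteroscedastic errors this standard error is wrong; for the example's noise it is too small. As a result, $t_p$ no longer follows the distribution used to judge significance.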
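The practical consequence shows up in hypothesis tests. This sketch works under the null $\beta = 0$ with the example's noise. It computes the usual $t$-statistic on many simulated datasets and records how often a nominal 5% two-sided test (threshold $|t| > 1.96$) rejects. The repetition count is an illustrative choice.

```python
import numpy as np

rng = np.random.default_rng(0)
reps, n = 2000, 1000

# The null hypothesis is true: beta = 0, so y is pure heteroscedastic noise.
x = rng.standard_normal((reps, n))
y = rng.standard_normal((reps, n)) + x * rng.standard_normal((reps, n))

sxx = (x * x).sum(axis=1)
beta_hat = (x * y).sum(axis=1) / sxx                 # OLS slope, no intercept
resid = y - beta_hat[:, None] * x
s2 = (resid ** 2).sum(axis=1) / (n - 1)              # usual estimate of sigma^2 (P = 1)
t_naive = beta_hat / np.sqrt(s2 / sxx)               # usual (homoscedastic) t-statistic

# A nominal 5% two-sided test rejects when |t| > 1.96.
reject_rate = np.mean(np.abs(t_naive) > 1.96)
print(f"rejection rate under the null: {reject_rate:.3f}")
```

If the usual standard errors were valid, the rejection rate would be close to 0.05. With this noise it is well above that, which is the kind of spurious significance seen in the opening example.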

Asymptotic behavior

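Finally, let’s consider the asymptotic behavior of the OLS estimator. A standard assumption is that

$$
\frac{1}{N}\mathbf{X}^{\top}\mathbf{X} \xrightarrow{p} \mathbf{Q}
$$

for some positive definite matrix $\mathbf{Q}$. Under homoscedasticity, this gives

$$
\sqrt{N}\,(\hat{\boldsymbol{\beta}} - \boldsymbol{\beta}) \xrightarrow{d} \mathcal{N}\left(\mathbf{0}, \sigma^2\mathbf{Q}^{-1}\right).
$$

However, with $\mathbb{V}[\boldsymbol{\varepsilon} \mid \mathbf{X}] = \boldsymbol{\Omega}$, suppose that

$$
\frac{1}{N}\mathbf{X}^{\top}\boldsymbol{\Omega}\mathbf{X} \xrightarrow{p} \mathbf{Q}_{\Omega}
$$

for some matrix $\mathbf{Q}_{\Omega}$. Then

$$
\sqrt{N}\,(\hat{\boldsymbol{\beta}} - \boldsymbol{\beta}) \xrightarrow{d} \mathcal{N}\left(\mathbf{0}, \mathbf{Q}^{-1}\mathbf{Q}_{\Omega}\mathbf{Q}^{-1}\right).
$$

But we cannot simplify the covariance matrix. The usual asymptotic variance $\sigma^2\mathbf{Q}^{-1}$, and any inference based on it, is therefore incorrect even in large samples.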
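For the opening example, these limits can be worked out by hand. $\mathbf{Q} = \mathbb{E}[x^2] = 1$ and $\mathbf{Q}_{\Omega} = \mathbb{E}[x^2(1 + x^2)] = 4$, so the asymptotic variance is $4$. The homoscedastic formula with the average noise variance $\mathbb{E}[1 + x^2] = 2$ would instead give $2$. The sketch below, assuming these sample averages converge as stated, shows the empirical versions settling toward those values as $N$ grows.

```python
import numpy as np

rng = np.random.default_rng(0)

for n in [1_000, 100_000, 1_000_000]:
    x = rng.standard_normal(n)
    omega_diag = 1.0 + x ** 2                 # Var(noise_n | x_n) in the example
    Q = np.mean(x * x)                        # (1/N) X'X -> E[x^2] = 1
    Q_omega = np.mean(x * x * omega_diag)     # (1/N) X' Omega X -> E[x^2 + x^4] = 4
    avar = Q_omega / Q ** 2                   # Q^{-1} Q_omega Q^{-1} in the scalar case
    print(f"N={n:>9,}: Q={Q:.3f}  Q_omega={Q_omega:.3f}  avar={avar:.3f}")
```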

Summary

In short, without homoscedasticity, not all of the nice properties of the OLS estimator hold. $\hat{\boldsymbol{\beta}}$ remains unbiased, but it is no longer efficient and its usual variance formula is wrong, so standard inference using OLS on heteroscedastic data will be incorrect. There are a few solutions to this problem. The first is to use generalized least squares (GLS) if we know the error covariance. This is because GLS is the best linear unbiased estimator when assuming generalized error terms. Another approach is to still use OLS but with heteroscedasticity-robust standard errors instead of the usual standard errors. Finally, in some situations, we can use weighted least squares. I will discuss these topics in more detail in future posts.
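As a preview of the robust-standard-error option, below is a minimal NumPy sketch of the HC0 ("White") sandwich estimator. It replaces the unknown $\boldsymbol{\Omega}$ with the diagonal matrix of squared OLS residuals. The function name `ols_with_hc0` is hypothetical. In statsmodels, the same estimator is available through `sm.OLS(y, x).fit(cov_type="HC0")`, with `"HC1"` to `"HC3"` as small-sample variants.

```python
import numpy as np

def ols_with_hc0(X: np.ndarray, y: np.ndarray):
    """OLS coefficients with the usual and HC0 (White) standard errors."""
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    beta_hat = XtX_inv @ X.T @ y
    resid = y - X @ beta_hat
    # Usual standard errors from s^2 (X'X)^{-1}.
    s2 = resid @ resid / (n - p)
    se_usual = np.sqrt(np.diag(s2 * XtX_inv))
    # HC0 sandwich: (X'X)^{-1} X' diag(e^2) X (X'X)^{-1}.
    meat = X.T @ (resid[:, None] ** 2 * X)
    se_hc0 = np.sqrt(np.diag(XtX_inv @ meat @ XtX_inv))
    return beta_hat, se_usual, se_hc0

# Data generated like the opening example (different random draws).
rng = np.random.default_rng(0)
n = 1000
x = rng.standard_normal(n)
y = 0.01 * x + rng.standard_normal(n) + x * rng.standard_normal(n)

beta_hat, se_usual, se_hc0 = ols_with_hc0(x[:, None], y)
print(f"beta={beta_hat[0]:.4f}  t_usual={beta_hat[0] / se_usual[0]:.2f}  t_hc0={beta_hat[0] / se_hc0[0]:.2f}")
```

On data like this, the HC0 standard error is typically larger than the usual one (by roughly $\sqrt{2}$ for this noise). That pulls an inflated $t$-statistic back toward a sensible scale.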